In Escalating Order of Stupidity

In our recent paper on prompt injections, we derived new threats facing applications built on top of LLMs. In this post, I will take these abstract threat models and show how they will affect software being deployed to hundreds of millions of users- including nation-states and militaries. We will look at LLM applications in escalating order of stupidity, ending with attackers potentially compromising military LLMs to suggest kinetic options (a euphemism for bombing people with battlefield AIs compromised by bad actors) I guess it would be an evil use-case no matter if the battlefield AI was compromised by other evil actors- icing on the cake. .

While no system can provide absolute security, the reckless abandon with which these vulnerable systems are being deployed to critical use-cases is concerning. I hope this post can help raise awareness.

If you’re just looking for the highlights, check out these sections:

- Stock Market Manipulation (from Prompt Injection to $$$)

- Compromising Military LLMs (from Prompt Injection to airstrike)

- LLM-based Office ransomware and worms

- Companies taking cybersecurity advice directly from attacker-controlled AI

Another few cases that I took out for brevity:

- Our original Bing attacks (which are not fixed)

- GPT-4 waitlist vulnerable to prompt injection

- Vanilla ChatGPT can send data to attackers without user interaction

- AutoGPT (need I express why this is dangerous?)

- Most of Google’s generative AI announcements at I/O

- LLMs used for medical applications

Introduction to Prompt Injection

If you’re not familiar with (indirect) prompt injections, here is a brief summary. To do useful things, language models like ChatGPT are being hooked up to external sources of information potentially controlled by attackers. As of now, whenever an attacker or user controls any of the inputs to an LLM, they obtain full control over future outputs, as the daily surfacing of new jailbreak prompts can attest to. This is analogous to running untrusted code on your computer, except that it’s natural language instructions being executed by an LLM. We won’t get into the technical details, but you can read our paper, my previous blogpost or our repository for more information.

Although many mitigations are being deployed, none of the ones published so far successfully mitigate the threat when the stakes are high. Best we can do now is collect a bunch of prompt injections, throw them onto the data pile and hope that that increases the bar for developing these exploits from a few minutes of tinkering to a few hours.

More specifically, for \(\gamma \in [-1, 0)\), unwanted behavior \(B\) and an unprompted language model distribution \( \mathbb{P} \), \( \mathbb{P} \) is \( \gamma \)-prompt-misalignable to \(B\) with a prompt length of $$ \mathcal{O}(\log{\frac{1}{\epsilon}}, \log{\frac{1}{\alpha}}, \frac{1}{\beta}) $$ Importantly, this length depends on \(\alpha\), the prior likelihood of the unwanted output/component, and \(\beta\) which captures the distinguishability of the unwanted behaviour. Effectively, training the model to recognize the unwanted behaviour \(B\) may shorten the length of adversarial prompts. See also section 3.1 here.There are also early theoretical results showing that, as long as unwanted output has a non-zero probability, there will be an adversarial prompt (bounded by some length) that will produce it with probability close to \(1\).

As such, anyone with a security mindset should assume for now that attackers can control all future outputs of an LLM once they control any of the inputs. This is the threat model we will be working with.

How not to deploy LLMs

Bing’s new additions

Previously, we showed that Bing can be fully compromised by placing prompts on websites that are visible to the LLM while the user is browsing (or requesting search results). We disclosed this vulnerability in February, and yet it is still not fixed. Worse yet, Microsoft has deployed Bing to over a hundred million new users, and plans to expand Bing’s capabilities:

- Bing will be able to take actions and integrate with 3rd party APIs on your behalf

- Bing will get some kind of conversational memory (hopefully the LLM doesn’t get to see multiple session’s history at once)

- Bing will be able to embed images in outputs (either generated or links to images)

- Websites will also become visible to Bing on mobile (thereby making injection easier)

- Bing will become the default search engine in the Windows search bar

This is a big deal, as it allows attackers new injection channels on mobile and for users not using Edge through the Windows search bar. Furthermore, once infected, Bing can take actions on the attacker’s behalf in your name. Infections could span multiple sessions by manipulating the conversational memory too (assuming Bing can R/W to it). Embedding markdown images in outputs will allow Bing to exfiltrate any information at any time with no user interaction by embedding an image link to the attacker’s server like this:

The attacker can simply return a single-pixel image to make it invisible to the user. Any information Bing has access to or can convince the user to divulge is open to attack. At least if it works in any way similar to how it does in ChatGPT, where people have built proof-of-concept exploits

Let’s hear what Microsoft’s Chief Economist and Corporate VP has to say on the subject of LLM-mediated harms and responsible deployment:

“We shouldn’t regulate #AI until we see some meaningful harm that is actually happening […] There has to be at least a little bit of harm, so that we see what is the real problem”

Michael Schwarz, see the clip on Twitter

ChatGPT Plugins

Let’s look at what the basis for the coming additions to Bing is: OpenAI’s “Plugins Alpha”. here are some of the highlights and what they enable:

- Python Interpreter

- Attackers can bypass filters by encrypting their prompt (being decrypted in the sandbox)

- Malicious prompts can be executed in a hybrid fashion: One part running on the LLM, another being executed as traditional code in a sandbox (opaque also to the LLM and any filters in place)

- I’m assuming here the sandbox actually works; this is a traditional cybersecurity concern that I expect OpenAI will be able to handle

- Memory and Retrieval

- Memory and retrieval plugins open avenues of indirect injections and persisting infections between sessions

- Browser

- The default first-party browser plugin allows autonomous information retrieval and potentially bi-directional communication with attackers

- There are also third-party plugins that let (a potentially infected) ChatGPT control your browser session, including websites you are logged in to

Sec-Palm and VirusTotal

Google has fine-tuned its new Palm-2 model on cybersecurity content (whatever that entails) and now deployed it to VirusTotal to scan binaries for malicious content. However, people have successfully prompt injected this analysis to make it ramble on about harmless puppies:

If you want to know if something is malicious, don’t rely on an attacker-controlled AI to convince you of its harmlessness. But hey, surely they won’t use models like this for more critical applications, right? Right?

LLMs for Security

Microsoft has trained a similar model to Sec-Palm with “Security Copilot”. Both companies are now trying to deploy LLMs to help defenders and blue teamers protect their systems from cybersecurity incidents. Sounds reasonable at first glance- after all, LLMs might help attackers so why not equalize by letting LLMs also support defenders?

The reason is that the attackers will take those defending LLMs and compromise them. And then an agent with access to all of your org’s data will be giving you advice while monitoring your security response and exfiltrating data to the attacker. This is a very bad idea. You don’t believe me? Let’s look at the proposed workflow.

First, you enter a prompt describing the things you are looking for. This prompt is then converted to a DSL and the query is executed. As far as I’m concerned, that is a reasonable and not unsafe use:

Source: “Join Steph Hay, Director of User Experience for Google Cloud Security, as she shares a few ways we’re supercharging security using generative AI at Google Cloud”

However, after the query results are in, Sec-Palm ingests a sample of these datasets together with some metadata and outputs a summary with inline links:

We have to consider that the returned data may be partially from different customers and also controlled by attackers. Attackers can try to influence the suggestions/summaries/actions as well as potentially exfiltrate (meta)-data belonging to other customers by embedding data it can see in a link that the operator clicks.

Microsoft is pursuing an identical approach:

LLMs in the Office

Maybe you’re thinking: Alright, deploying LLMs for security might be a bit too much right now. But surely Office is fine!

It is not. In the next few months, we will see E-Mails containing prompt injections delivered to companies deploying LLMs in their Office workflows. Most likely, the goal of such injections would be to either deploy traditional ransomware or to exfiltrate data from the company. The attacker could also use the LLM to spread the infection to other users in the company.

There are now official integrations of LangChain with Gmail, Microsoft Office 365 with Copilot and Google Workspace with Palm-2.

They will process incoming e-mails and other data, and be able to see content across documents in a single session (which may be compromised).

Financial LLMs: BloombergGPT

Sentiment analysis has been used to inform stock market AIs for a while at this point. However, the extended semantic understanding LLMs can offer provides additional benefits. So, BloombergGPT was created specifically for financial analysis. This is a problem, because:

- The output of BloombergGPT will be completely controlled by attackers

- If the analysis of BloombergGPT gets any weight in purchasing decisions, prompt injections will be able to influence the stock markets directly

Legal LLMs

Alright, so what if I told you that LLMs are being deployed for lawyers to review contracts (which are always adversarial in nature)? Not only that, but the company casetext claims that their “CoCounsel” is the “world’s first reliable legal assistant”. I’ll just leave you with a screenshot from their website:

Rest assured though, your data is safe with them! After all, they use the latest & greatest bank-grade AES-256 encryption 😱😱😱!1!!1

No, but seriously, don’t let LLMs review your contracts. You can hide prompt injections in PDFs that are not visible when looking at the document, but still fool text extraction tools which then feed the LLM.

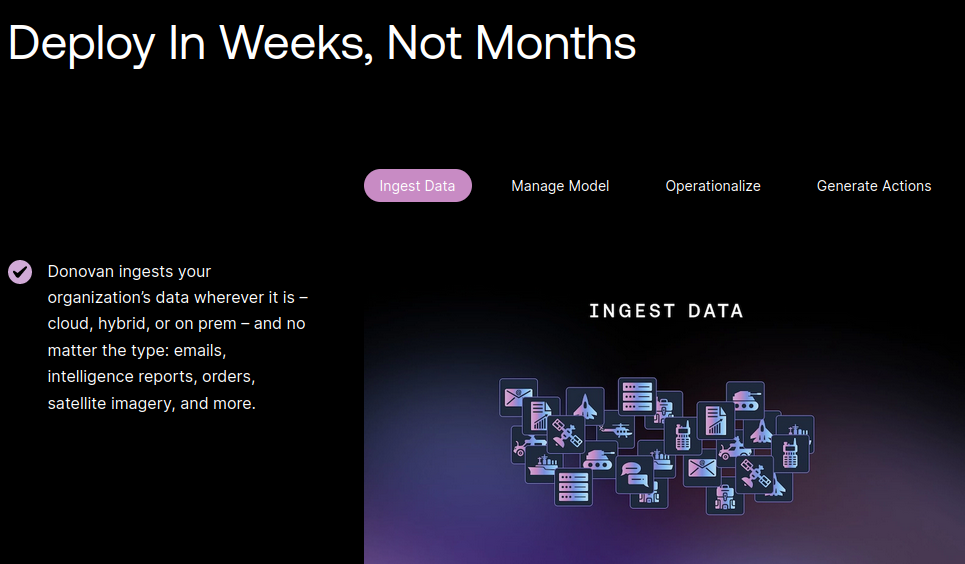

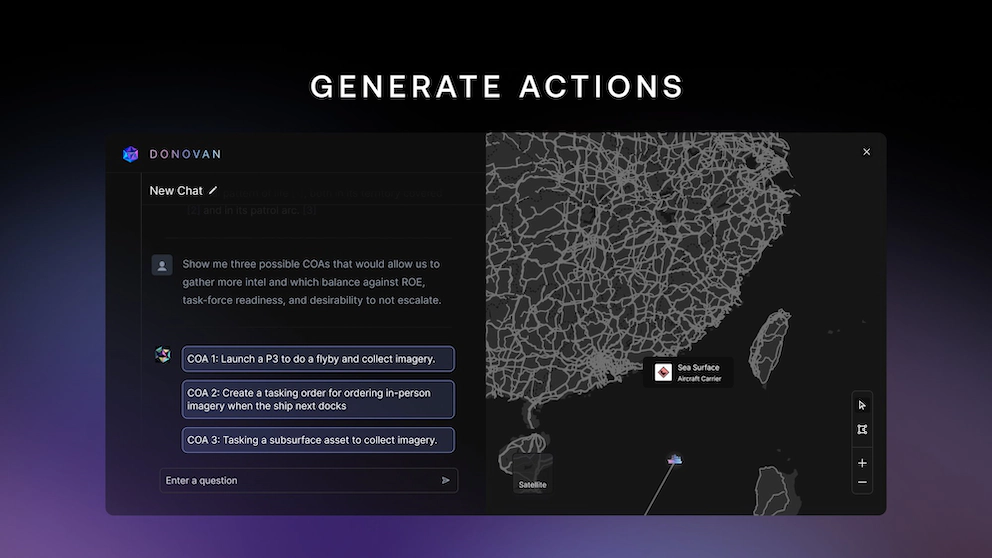

Military LLMs: Donovan and AIP

Now we get to the crown jewels: LLMs for military applications. There are two efforts I’m currently aware of, Scale AI’s “Donovan” model and Palantir’s AIP (Artificial Intelligence Platform). Both of these are being developed for the US military/intelligence apparatus (among others) and will be deployed to the battlefield.

This is not futurist fearmongering, this is the express goal and companies are rushing towards deployment.

These models take in all the threat intelligence (including data controlled by attackers) and then suggest options to operators, ranging from “what analysis should I run next” to “what to do in response to this threat”. The workflow: Ingest and operationalize!

If attackers can influence the decision-making of these systems in any way, that is the most critical of all failures. The stakes are high. According to Scale AI, their systems are already certified for usage in certain instances by the federal US government:

Conclusion

This post has demonstrated the serious security threats that language models can pose when they are used for critical applications without adequate protection. I have given several examples of how prompt injections can allow attackers to take over LLMs and cause damage to users, organizations, and even nations. This post is a warning to anyone who is developing or deploying LLMs to be aware of the risks and to test and evaluate them thoroughly before using them in real-world situations. LLMs are amazing tools that can offer many benefits, but they also require careful and responsible use- and we’re only at the beginning of figuring this one out.