AI security sucks quite a bit right now, and I think it could be better. If you are thinking: “Oh no! How would I obtain my napalm recipes on ChatGPT?” I would like to point out that AI security and safety are not the same thing. Security mostly concerns how systems driven by LLMs can be compromised by adversaries. For example, you could have your data stolen while using Bard or get manipulated by Bing, while using them for completely normal tasks.

What do LLMs actually do? What are prompts? If you ask most people, the answer would be something like “they predict what comes next” and “prompts instruct an LLM to do something”. I want to offer a new perspective in this post, and I’ll show how this perspective can lead to new creative uses and better, more steerable outputs from LLMs. It also has implications for the safety and security of models, as the techniques in this post may help to circumvent model alignment and allow for easier exfiltration of training data.

If you have found this page by solving the puzzle, congratulations! This write-up is your reward. If you’re in a rush and know the puzzle, skip to How it works below or check out the source code on GitHub.

In this post, I will briefly explain how the puzzle works and what it means for the security of generative AI integrations. Raising awareness of these issues was the main goal.

To escape a deluge of generated content, companies are screening your resumes and documents using AI. But there is a way you can still stand out and get your dream job: Prompt Injection. This website allows you to inject invisible text into your PDF that will make any AI language model think you are the perfect candidate for the job.

You can also use this tool to get a language model to give you an arbitrary summary of your document.

In our recent paper on prompt injections, we derived new threats facing applications built on top of LLMs. In this post, I will take these abstract threat models and show how they will affect software being deployed to hundreds of millions of users- including nation-states and militaries. We will look at LLM applications in escalating order of stupidity, ending with attackers potentially compromising military LLMs to suggest kinetic options (a euphemism for bombing people with battlefield AIs compromised by bad actors)⊕ I guess it would be an evil use-case no matter if the battlefield AI was compromised by other evil actors- icing on the cake.

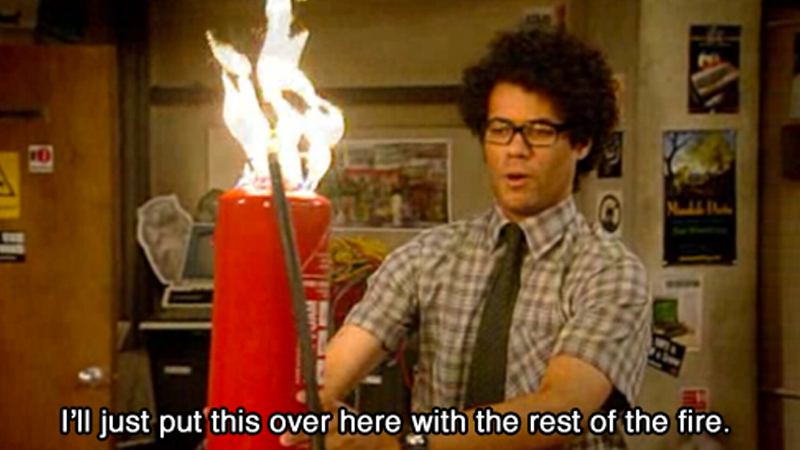

From Microsoft 365 Copilot to Bing to Bard, everyone is racing to integrate LLMs with their products and services. But before you get too excited, I have some bad news for you:

Deploying LLMs safely will be impossible until we address prompt injections. And we don’t know how.

Introduction# Remember prompt injections? Used to leak initial prompts or jailbreak ChatGPT into emulating Pokémon? Well, we published a preprint View the preprint on ArXiV: More than you’ve asked for: A Comprehensive Analysis of Novel Prompt Injection Threats to Application-Integrated Large Language Models.